Projects Float Their First Attempts at Meeting New Reporting Guidelines

by Robin Kipke

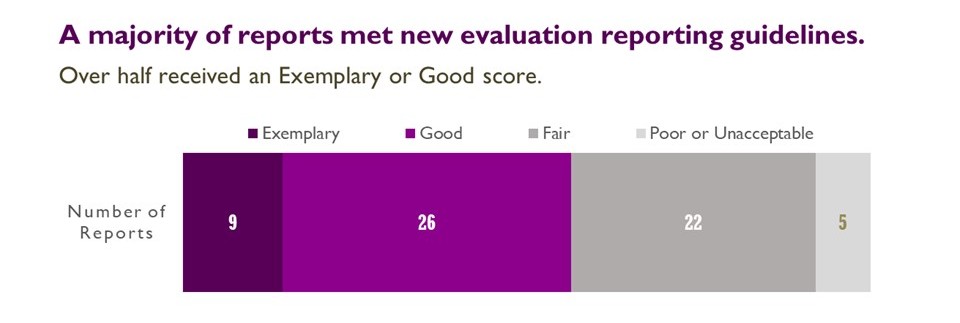

The first run of the grand experiment in more useful evaluation reporting is complete, and the results look promising. True, some reports have yet to find their sea legs, but a majority of them achieved relatively smooth sailing!

Reports could earn a maximum of 68 points for the following elements: Cover Page, Abstract, Aim and Outcome, Background, Evaluation Methods and Design, Implementation and Results, Conclusions and Recommendations, and the Appendix. Reports earning 60-68 points were designated Exemplary, meaning reports that others could look to as a model. Reports earning 51-59 points were rated as Good. Typically these contained some really excellent sections and a few places where gaps existed. Reports in the Fair category, earning 34-50 points, often told part of the story but not clearly or thoroughly enough for readers to get a good sense of what was going on. Reports in the Poor category (17-33 points, the range left between the Fair and Unacceptable bands) usually did such an inadequate job of detailing project activities that it was hard to follow how the objective moved forward. Reports earning an Unacceptable 0-16 points didn’t make much of an effort at all to communicate what the project was about.
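For anyone who keeps report scores in a spreadsheet or script, the rating bands above can be sketched as a simple lookup. This is only an illustration, not TCEC's actual scoring tool: the names `RATING_BANDS` and `rate_report` are made up for the example, and it assumes the Poor band covers the 17-33 points that sit between the Unacceptable and Fair ranges.

```python
# Rating bands as (lowest score in band, label), listed from highest to lowest.
# Assumption: "Poor" covers 17-33, the range left between the
# Unacceptable (0-16) and Fair (34-50) bands.
RATING_BANDS = [
    (60, "Exemplary"),
    (51, "Good"),
    (34, "Fair"),
    (17, "Poor"),
    (0, "Unacceptable"),
]

MAX_SCORE = 68  # maximum points a report could earn


def rate_report(score: int) -> str:
    """Return the rating label for a total report score (hypothetical helper)."""
    if not 0 <= score <= MAX_SCORE:
        raise ValueError(f"score must be between 0 and {MAX_SCORE}, got {score}")
    # Walk the bands from the top; the first floor the score reaches is its band.
    for floor, label in RATING_BANDS:
        if score >= floor:
            return label
    raise AssertionError("unreachable: the lowest band starts at 0")


if __name__ == "__main__":
    for points in (68, 55, 40, 20, 10):
        print(points, rate_report(points))
```

Listing the bands from the top down keeps each cutoff in one place, so a change to the point ranges would only touch the table.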

As you might expect with new requirements being introduced toward the end of a work cycle, some elements were easier than others to incorporate into reports. Writers did well on the cover page, stating the aim and outcome up front, and drawing conclusions about the objective and why things worked out the way they did. Out of 62 reports, 50, 38 and 34 earned a perfect score for each of those components, respectively. Many writers also did a good job of managing the right amount of detail in the report versus the appendices, providing an overview of the evaluation components, and identifying the rationale for the objective: 33, 31 and 27 reports, respectively, earned perfect scores for those elements.

The areas that gave writers more trouble were: writing a succinct abstract, detailing any previous work done on the issue in the target area for the background section, articulating the utility of lessons learned or showing linkages between activities, and specifying the purpose, timing and scope of key intervention and evaluation activities.

However, the three elements where people scored the lowest had to do with displaying data effectively in the implementation and results section, demonstrating how findings were shared with different stakeholders throughout the work period, and explaining how the project attempted to tailor its approach and/or materials to different populations and audiences (cultural competency).

Despite this, project directors and writers liked the new format of the reports. In a TCEC survey of local lead agency project directors, evaluators and others involved in producing evaluation reports, out of 69 respondents, 52% said the new format helped their project describe how it went about achieving the objective, 46% said it helped them assess strategies and tactics, and 49% said it helped them communicate useful information to stakeholders. Just 10% said no to each of the questions, and 38%, 43% and 41%, respectively, said they weren’t sure. Their comments provided more insight into their views:

I liked the overall format. It is far better than the old version! I especially appreciated that the evaluation and implementation activities were integrated into a single chronological narrative.

I also loved the graphics and visual data tools provided by TCEC.

It seems to be much more reader friendly, both visually with the charts, and with amount and style of content. It is a document that I can easily share with our stakeholders, where before, I would not have given it to the general public…this is a readable report that partners will gain valuable insight with.

I liked focusing only on relevant evaluation data. I felt there was a switch to focusing more on the intervention activities which was valuable but much more time consuming to report on.

What to do differently

For many staff, this round of report writing was their first experience in documenting tobacco control activities. It’s one thing to have a set of guidelines and even an example report; it’s another thing to try to create something from scratch, especially if some of the pieces (like activity protocols and outcomes) are missing! However, with a bit more training and additional examples, everyone should be able to write more useful and readable reports.

The Tobacco Control Evaluation Center recently hosted a webinar on methods for documenting organizational progress along the way. Speakers shared four different tools your organization can use to capture HOW activities were carried out, WHAT happened as a result, and HOW they informed or supported activities that followed. You can listen to the webinar and access the tools here.

We also hope you’ll use the scores and reviewer feedback to see where your reports may not have communicated as clearly as your project thought they did. See (insert name of other article). Compiling future reports that meet the new guidelines may also require some fresh ways for evaluators and project staff to communicate and collaborate. Here are more comments from the TCEC survey respondents:

We are re-examining what information we collect and how we document it. There are many narratives that can be told, and we plan to develop new strategies that lift up our impact in the community.

Since reports were written, [our] evaluator has been and will continue to attend more tobacco prevention staff meetings to keep better tabs on current implementation status and push program staff to greater intervention and evaluation plan fidelity.

Yes, we plan to give our reports and data to our external evaluator as soon as we complete them. We also plan to give our track logs to review by both the internal and external evaluator on a monthly basis for review and to ask any questions or get clarification.

If your project has questions about how to do a better job of documenting your activities and writing better reports, let us know what additional training or resources we can provide. TCEC is here to help. After all, we’re all in the same boat!